Calibrating the camera.

Following this tutorial: http://wiki.ros.org/camera_calibration/Tutorials/MonocularCalibration

First, print the black-and-white pattern (checkerboard as PDF).

On my printer, the squares come out with an edge length of 25 mm.

Now install the software:

NOTE: this works without changes under Melodic; under Noetic (Ubuntu Focal Fossa), Python 2 is not installed by default. However, the header of cameracalibrator.py contains the shebang #!/usr/bin/python.

The problem can be solved with a symbolic link:

sudo ln -s /usr/bin/python3 /usr/bin/python
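To confirm that the shebang really is the problem, one can check which interpreter a script asks for and whether it exists. The following is only a minimal sketch and not part of the original setup: the helper names `read_shebang` and `interpreter_exists` are hypothetical, and the path to cameracalibrator.py depends on the installation.

```python
import os
import shutil


def read_shebang(script_path):
    """Return the interpreter named in the first line, or None if there is no shebang."""
    with open(script_path, "r", encoding="utf-8", errors="replace") as handle:
        first_line = handle.readline().strip()
    if not first_line.startswith("#!"):
        return None
    parts = first_line[2:].split()
    return parts[0] if parts else None


def interpreter_exists(interpreter):
    """True if the interpreter path exists or the name can be found on PATH.

    Limitation: for '#!/usr/bin/env python' this only checks /usr/bin/env.
    """
    if interpreter is None:
        return False
    return os.path.exists(interpreter) or shutil.which(interpreter) is not None
```

If `interpreter_exists(read_shebang(path))` returns False for the calibrator script, the interpreter named in its shebang is missing and the script cannot start as it is.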

Then the calibration package can be installed, either via

rosdep install camera_calibration

or via the package manager

sudo apt-get install ros-noetic-camera-calibration

The calibration is started with

root@raspberrypi:~# rosrun camera_calibration cameracalibrator.py --size 8x6 --square 0.025 image:=/esp32cam/image camera:=camera

However, this produces an error message, because by default no camera info manager is running for the camera.

Waiting for Service /camera/set_camera_info ...

Service not found

Either omit camera:=camera or simply append --no-service-check to the command.
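The arguments of the calibration command can be derived from the printed board. The sketch below assumes a board of 9x7 squares: --size counts the interior corners (one less in each direction), and --square is the edge length in meters. The function name `calibrator_args` is hypothetical; only flags that appear in the commands above are used.

```python
def calibrator_args(squares_x, squares_y, square_mm, image_topic, camera_ns=None):
    """Build the rosrun argument list for cameracalibrator.py.

    squares_x / squares_y count the printed squares; the tool expects the
    number of interior corners, which is one less in each direction.
    """
    corners = "{}x{}".format(squares_x - 1, squares_y - 1)
    square_m = square_mm / 1000.0  # --square is given in meters
    args = [
        "rosrun", "camera_calibration", "cameracalibrator.py",
        "--size", corners,
        "--square", "{:g}".format(square_m),
        "image:=" + image_topic,
    ]
    if camera_ns is not None:
        # With a camera namespace the tool waits for a camera info service,
        # so skip that check when no such service is running.
        args += ["camera:=" + camera_ns, "--no-service-check"]
    return args


print(" ".join(calibrator_args(9, 7, 25, "/esp32cam/image", "camera")))
```

Leaving out the last argument corresponds to the first workaround: without camera:=camera there is no service check to skip.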

Once the calibration tool is running, hold the printed black-and-white pattern (A4, glued to a plastic lid) in front of the camera and move it around. The console output of a complete run:

root@raspberrypi:~# rosrun camera_calibration cameracalibrator.py --size 8x6 --square 0.025 image:=/esp32cam/image camera:=camera --no-service-check

(display:405): GLib-GObject-CRITICAL **: 07:11:26.494: g_object_unref: assertion 'G_IS_OBJECT (object)' failed

*** Added sample 1, p_x = 0.461, p_y = 0.397, p_size = 0.482, skew = 0.485

*** Added sample 2, p_x = 0.420, p_y = 0.420, p_size = 0.503, skew = 0.338

*** Added sample 3, p_x = 0.343, p_y = 0.352, p_size = 0.520, skew = 0.266

*** Added sample 4, p_x = 0.416, p_y = 0.558, p_size = 0.420, skew = 0.021

*** Added sample 5, p_x = 0.551, p_y = 0.465, p_size = 0.350, skew = 0.023

*** Added sample 6, p_x = 0.662, p_y = 0.501, p_size = 0.401, skew = 0.069

*** Added sample 7, p_x = 0.587, p_y = 0.685, p_size = 0.536, skew = 0.048

*** Added sample 8, p_x = 0.646, p_y = 0.565, p_size = 0.595, skew = 0.087

*** Added sample 9, p_x = 0.683, p_y = 0.433, p_size = 0.617, skew = 0.141

*** Added sample 10, p_x = 0.464, p_y = 0.473, p_size = 0.653, skew = 0.041

*** Added sample 11, p_x = 0.390, p_y = 0.372, p_size = 0.633, skew = 0.049

*** Added sample 12, p_x = 0.818, p_y = 0.442, p_size = 0.321, skew = 0.168

*** Added sample 13, p_x = 0.278, p_y = 0.385, p_size = 0.402, skew = 0.148

*** Added sample 14, p_x = 0.364, p_y = 0.426, p_size = 0.370, skew = 0.227

*** Added sample 15, p_x = 0.750, p_y = 0.362, p_size = 0.412, skew = 0.077

*** Added sample 16, p_x = 0.888, p_y = 0.346, p_size = 0.426, skew = 0.144

*** Added sample 17, p_x = 0.606, p_y = 0.347, p_size = 0.429, skew = 0.031

*** Added sample 18, p_x = 0.257, p_y = 0.314, p_size = 0.449, skew = 0.075

*** Added sample 19, p_x = 0.478, p_y = 0.230, p_size = 0.475, skew = 0.039

*** Added sample 20, p_x = 0.464, p_y = 0.117, p_size = 0.533, skew = 0.106

*** Added sample 21, p_x = 0.757, p_y = 0.685, p_size = 0.369, skew = 0.032

*** Added sample 22, p_x = 0.812, p_y = 0.711, p_size = 0.501, skew = 0.081

*** Added sample 23, p_x = 0.756, p_y = 0.142, p_size = 0.526, skew = 0.133

*** Added sample 24, p_x = 0.292, p_y = 0.410, p_size = 0.550, skew = 0.050

*** Added sample 25, p_x = 0.319, p_y = 0.534, p_size = 0.509, skew = 0.035

*** Added sample 26, p_x = 0.117, p_y = 0.463, p_size = 0.495, skew = 0.043

*** Added sample 27, p_x = 0.123, p_y = 0.198, p_size = 0.465, skew = 0.079

*** Added sample 28, p_x = 0.230, p_y = 0.045, p_size = 0.444, skew = 0.067

*** Added sample 29, p_x = 0.265, p_y = 0.142, p_size = 0.483, skew = 0.036

*** Added sample 30, p_x = 0.346, p_y = 0.726, p_size = 0.448, skew = 0.068

*** Added sample 31, p_x = 0.505, p_y = 0.600, p_size = 0.464, skew = 0.135

*** Added sample 32, p_x = 0.357, p_y = 0.000, p_size = 0.541, skew = 0.034

*** Added sample 33, p_x = 0.257, p_y = 0.734, p_size = 0.604, skew = 0.058

*** Added sample 34, p_x = 0.179, p_y = 0.522, p_size = 0.574, skew = 0.008

*** Added sample 35, p_x = 0.403, p_y = 0.734, p_size = 0.530, skew = 0.348

*** Added sample 36, p_x = 0.654, p_y = 0.657, p_size = 0.542, skew = 0.278

*** Added sample 37, p_x = 0.575, p_y = 0.501, p_size = 0.486, skew = 0.237

*** Added sample 38, p_x = 0.525, p_y = 0.382, p_size = 0.494, skew = 0.273

*** Added sample 39, p_x = 0.334, p_y = 0.662, p_size = 0.567, skew = 0.110

*** Added sample 40, p_x = 0.528, p_y = 0.743, p_size = 0.394, skew = 0.030

*** Added sample 41, p_x = 0.614, p_y = 0.721, p_size = 0.291, skew = 0.045

**** Calibrating ****

*** Added sample 42, p_x = 0.660, p_y = 0.725, p_size = 0.410, skew = 0.128

D = [0.15022134806040208, -0.4230954242051678, 0.01752132842705994, -0.005663731092300748, 0.0]

K = [835.8540789959028, 0.0, 421.64641207176396, 0.0, 838.3777899657703, 356.8153829294532, 0.0, 0.0, 1.0]

R = [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]

P = [850.7354125976562, 0.0, 418.90434027338415, 0.0, 0.0, 847.2274780273438, 363.77392418121417, 0.0, 0.0, 0.0, 1.0, 0.0]

None

# oST version 5.0 parameters

[image]

width

800

height

600

[narrow_stereo]

camera matrix

835.854079 0.000000 421.646412

0.000000 838.377790 356.815383

0.000000 0.000000 1.000000

distortion

0.150221 -0.423095 0.017521 -0.005664 0.000000

rectification

1.000000 0.000000 0.000000

0.000000 1.000000 0.000000

0.000000 0.000000 1.000000

projection

850.735413 0.000000 418.904340 0.000000

0.000000 847.227478 363.773924 0.000000

0.000000 0.000000 1.000000 0.000000

('Wrote calibration data to', '/tmp/calibrationdata.tar.gz')

('Wrote calibration data to', '/tmp/calibrationdata.tar.gz')

('Wrote calibration data to', '/tmp/calibrationdata.tar.gz')

^Croot@raspberrypi:~# ls /tmp/calibrationdata.tar.gz

/tmp/calibrationdata.tar.gz

root@raspberrypi:~# ls -al /tmp/calibrationdata.tar.gz

-rw-r--r-- 1 root root 4523291 Aug 15 07:25 /tmp/calibrationdata.tar.gz
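The D and K lists in the output above can be read as follows: K is the 3x3 camera matrix in row-major order, and D holds five distortion coefficients. The sketch below assumes the five-coefficient plumb-bob model (k1, k2, p1, p2, k3), which is an assumption on my part since the output does not name the model. The function names `intrinsics` and `project` are hypothetical.

```python
# Values copied from the calibration output above.
D = [0.15022134806040208, -0.4230954242051678, 0.01752132842705994,
     -0.005663731092300748, 0.0]
K = [835.8540789959028, 0.0, 421.64641207176396,
     0.0, 838.3777899657703, 356.8153829294532,
     0.0, 0.0, 1.0]


def intrinsics(k):
    """Pick focal lengths and principal point out of the row-major 3x3 matrix."""
    return k[0], k[4], k[2], k[5]  # fx, fy, cx, cy


def project(x, y, k=K, d=D):
    """Map a normalized image point (x = X/Z, y = Y/Z) to distorted pixel coordinates."""
    k1, k2, p1, p2, k3 = d  # assumed order of the plumb-bob coefficients
    fx, fy, cx, cy = intrinsics(k)
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return fx * x_d + cx, fy * y_d + cy


print(project(0.0, 0.0))   # a point on the optical axis lands on the principal point
print(project(0.1, 0.05))  # an off-axis point, shifted by the distortion terms
```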

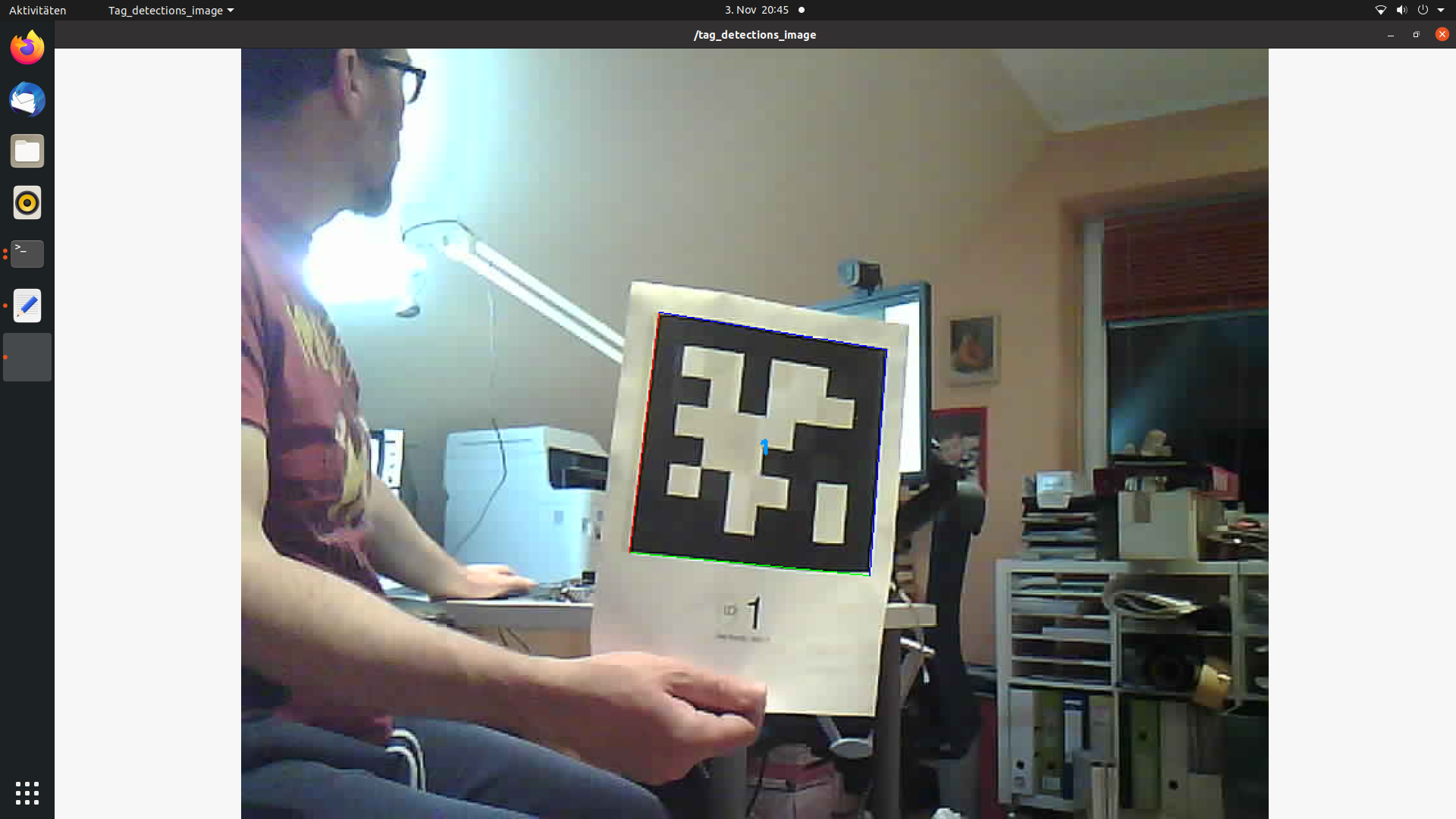

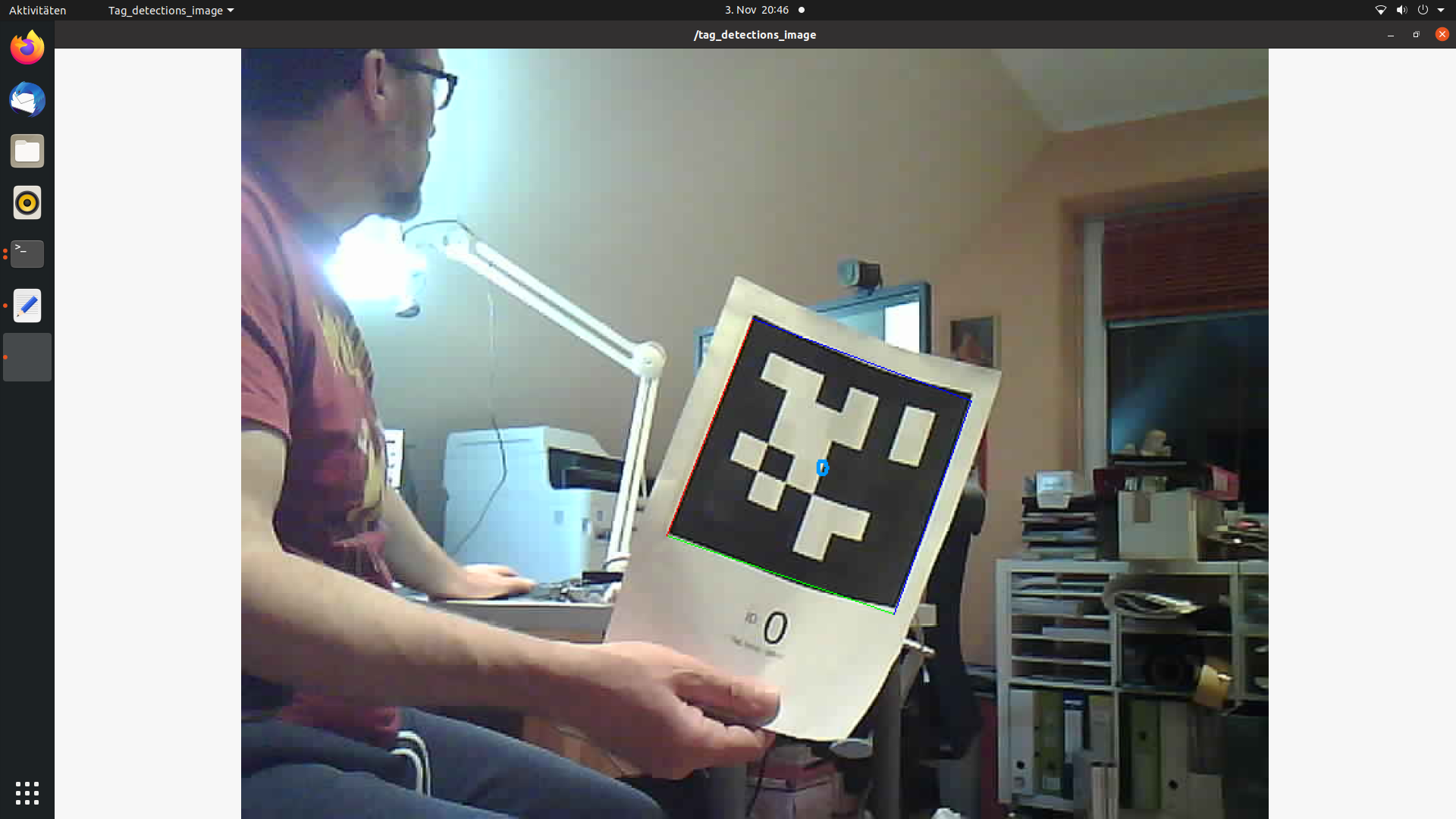

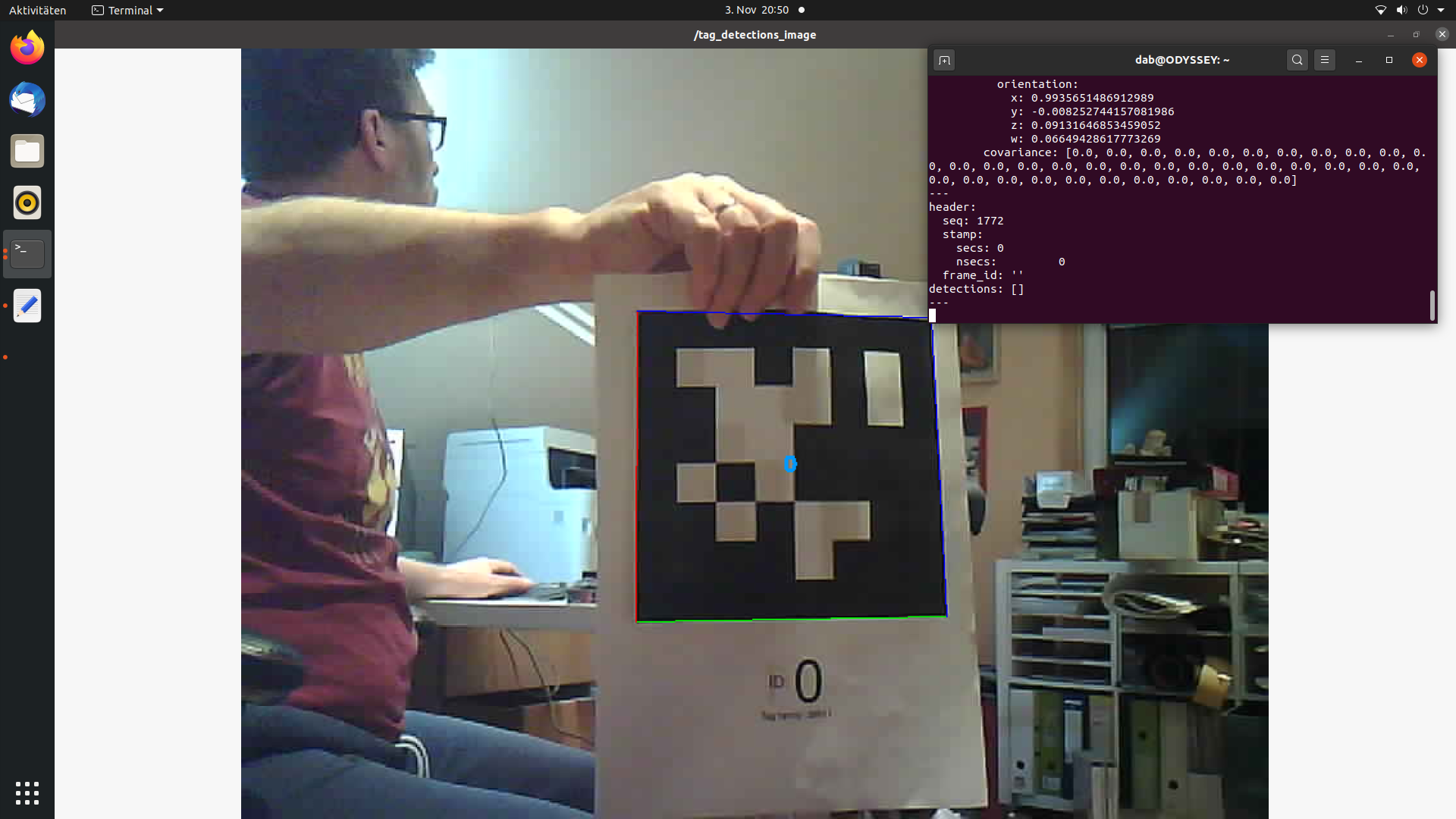

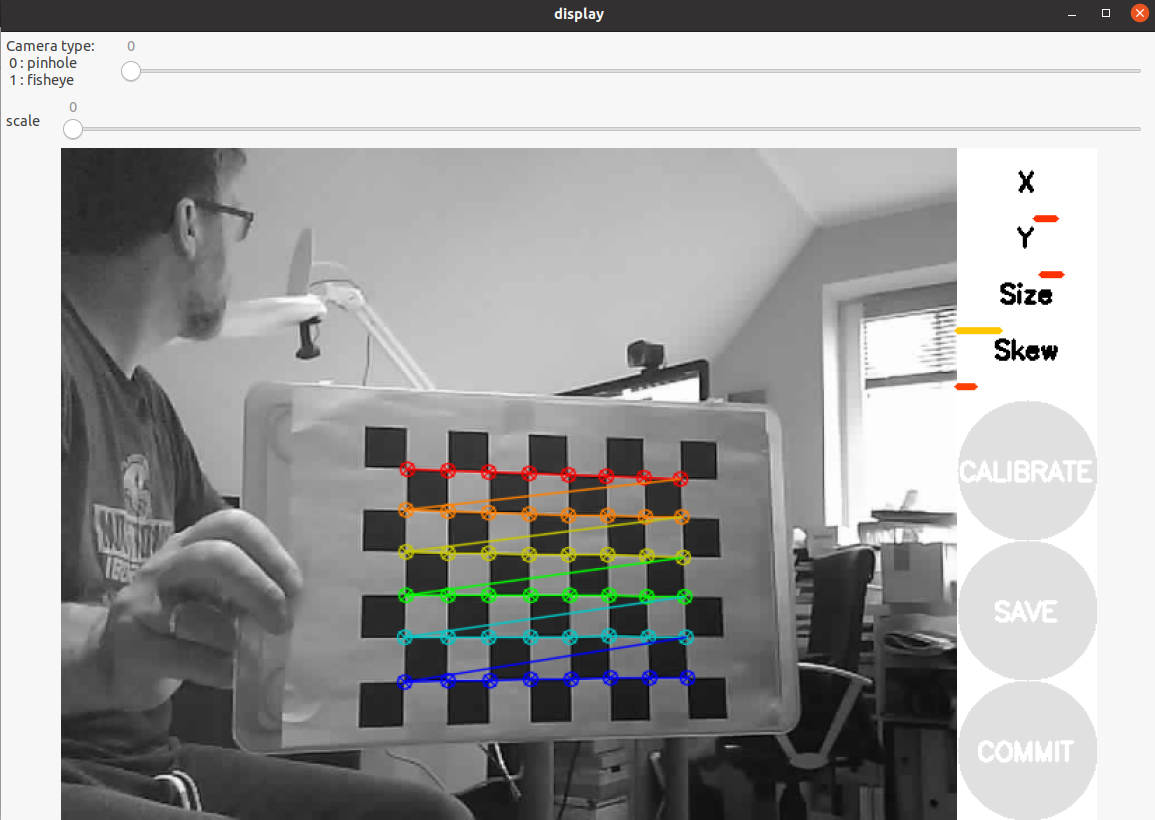

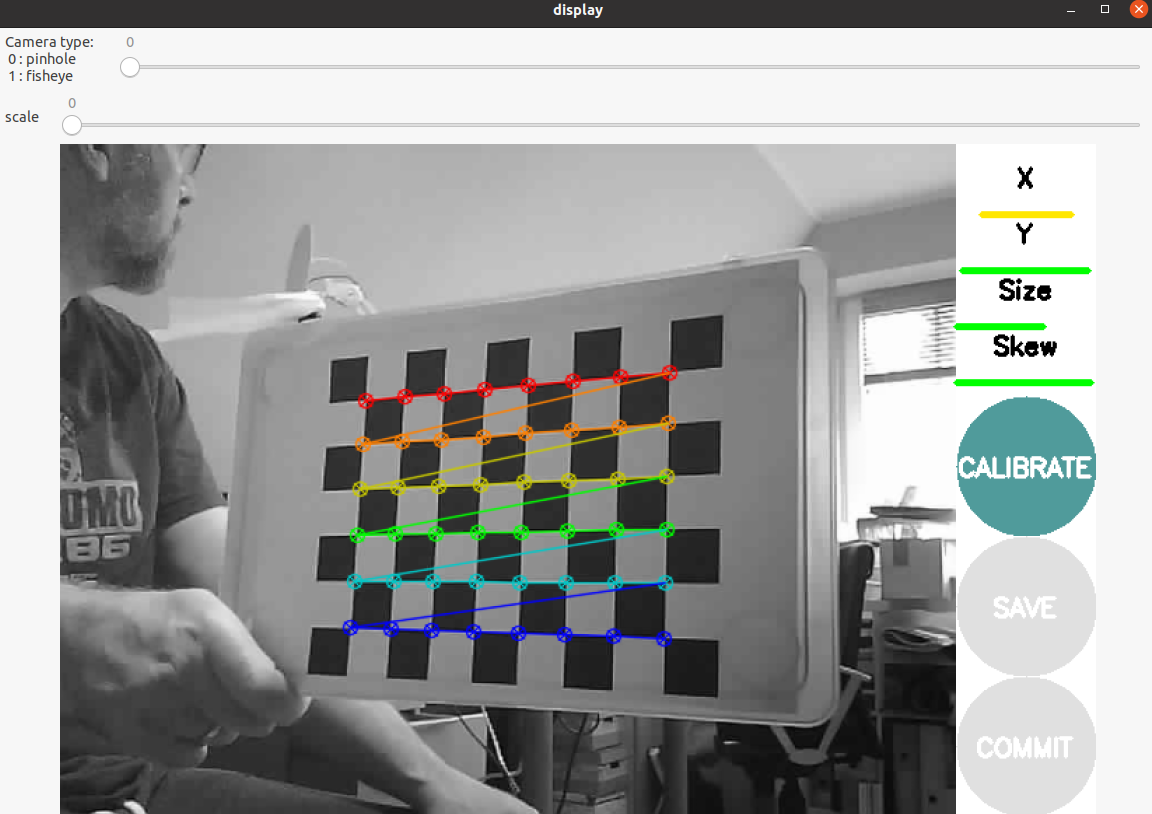

This is what the image looks like.

The calibration tool has detected the pattern. Sometimes the image is too dark for that.

Now move the pattern from top to bottom, from left to right, and from front to back. As soon as the tool has enough data for an axis, the corresponding bar (X, Y, Size, Skew) turns green. Once all bars are green or yellow, the calibrate button also turns green and can be clicked.
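The values p_x, p_y, p_size and skew that the tool logs for each sample indicate how well the pattern positions are spread out. The following is a simplified illustration of how such a spread could be evaluated. The target ranges are assumptions chosen for this example, and the real tool may use different values and logic. The name `coverage` is hypothetical.

```python
# A few (p_x, p_y, p_size, skew) tuples copied from the log above.
SAMPLES = [
    (0.461, 0.397, 0.482, 0.485),  # sample 1
    (0.888, 0.346, 0.426, 0.144),  # sample 16
    (0.117, 0.463, 0.495, 0.043),  # sample 26
    (0.357, 0.000, 0.541, 0.034),  # sample 32
    (0.528, 0.743, 0.394, 0.030),  # sample 40
]

# Assumed spread each parameter should cover before its bar counts as full.
TARGET_RANGE = {"x": 0.7, "y": 0.7, "size": 0.4, "skew": 0.5}


def coverage(samples, target=TARGET_RANGE):
    """Return, per parameter, the covered fraction of the target range (capped at 1.0)."""
    result = {}
    for index, name in enumerate(["x", "y", "size", "skew"]):
        values = [sample[index] for sample in samples]
        spread = max(values) - min(values)
        result[name] = min(spread / target[name], 1.0)
    return result


for name, fraction in coverage(SAMPLES).items():
    print("{:5s} {:.0%}".format(name, fraction))
```

With these five samples the x and y ranges are already covered, while the size values lie close together, which corresponds to moving the pattern too little towards and away from the camera.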

The data can then be stored with Save. The tool places an archive under /tmp for this.

The TAR archive contains the calibration images as well as the files ost.yaml and ost.txt. Rename ost.yaml to esp32cam.yaml and store it in the directory $ROS_HOME. That is the .ros folder in the home directory of the user (root/dab).

root@NAS:~/.ros# pwd

/root/.ros

root@NAS:~/.ros# ls

esp32cam.yaml roscore-11311.pid rospack_cache_02946445166995464026

log rosdep rospack_cache_14834868803710052660
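Extracting and renaming ost.yaml as described above can also be scripted. This is a minimal sketch under the assumption that the archive contains a regular file named ost.yaml. The function name `install_calibration` is hypothetical, and the fallback to ~/.ros applies when ROS_HOME is not set.

```python
import os
import tarfile


def install_calibration(archive_path, camera_name, ros_home=None):
    """Extract ost.yaml from the archive and store it as <camera_name>.yaml in ROS_HOME."""
    if ros_home is None:
        # Fall back to ~/.ros when the ROS_HOME variable is not set.
        ros_home = os.environ.get("ROS_HOME", os.path.expanduser("~/.ros"))
    os.makedirs(ros_home, exist_ok=True)
    target = os.path.join(ros_home, camera_name + ".yaml")
    with tarfile.open(archive_path, "r:gz") as archive:
        member = next(
            (m for m in archive.getmembers()
             if os.path.basename(m.name) == "ost.yaml"),
            None,
        )
        if member is None:
            raise FileNotFoundError("ost.yaml not found in " + archive_path)
        source = archive.extractfile(member)
        with open(target, "wb") as out:
            out.write(source.read())
    return target
```

Calling `install_calibration("/tmp/calibrationdata.tar.gz", "esp32cam")` would write the file to the location shown in the listing above.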

The command

````
root@NAS:~/catkin_ws# rosrun ros-esp32cam esp32cam_node
[ INFO] [1604006321.532070249]: camera calibration URL: file:///root/.ros/esp32cam.yaml
[ WARN] [1604006321.546284988]: Camera calibration file did not specify distortion model, assumin
````

starts the node that publishes the image and the camera info data.

On other systems, the stream can then be viewed with

````bash
rosrun image_view image_view image:=/esp32cam/image
````